When we first implemented FullStory analytics on one of our products, our goal was simple: collect behavioral data that could give us a clearer picture of how users were experiencing the product. Like many teams starting out with new tools, we began by producing basic reports: page load times, 404 errors, rage clicks, dead clicks, and thrashing (FullStory's term for erratic mouse movements).

Initially, these reports were something we pushed out to product managers and engineers. They were useful snapshots, but not yet embedded into our product decision-making cycle.

Over time, something interesting happened: demand for these reports grew. Product managers began asking for friction reports proactively. They weren’t just glancing at the data — they were diving into session replays, connecting quantitative signals to real customer experiences, and then logging bug and enhancement tickets to address issues.

This shift was more than just a nice outcome of a new tool. It was an inflection point in our UX research maturity.

Why This Works

The success of our friction reports came down to three things:

- Actionable Metrics

By focusing on signals of friction (lag, errors, rage clicks, dead clicks, and thrash), we gave teams a tangible way to spot usability pain points. These weren’t abstract survey results; they were measurable, observable behaviors directly tied to the user journey.

- Connecting Data to Stories

Numbers on their own can feel distant. But pairing friction metrics with FullStory session replays created a bridge. Product managers could see the frustration unfold. That emotional resonance sparked urgency, leading to tickets, fixes, and improvements.

- Operationalizing Distribution

Rather than letting the reports sit in inboxes as “nice-to-haves,” we established a regular rhythm for sharing insights and made it easy for stakeholders to access them. Over time, this shifted perception: the reports were no longer outputs of UX research; they became inputs for product decision-making.

The Bigger Impact on UX Research Maturity

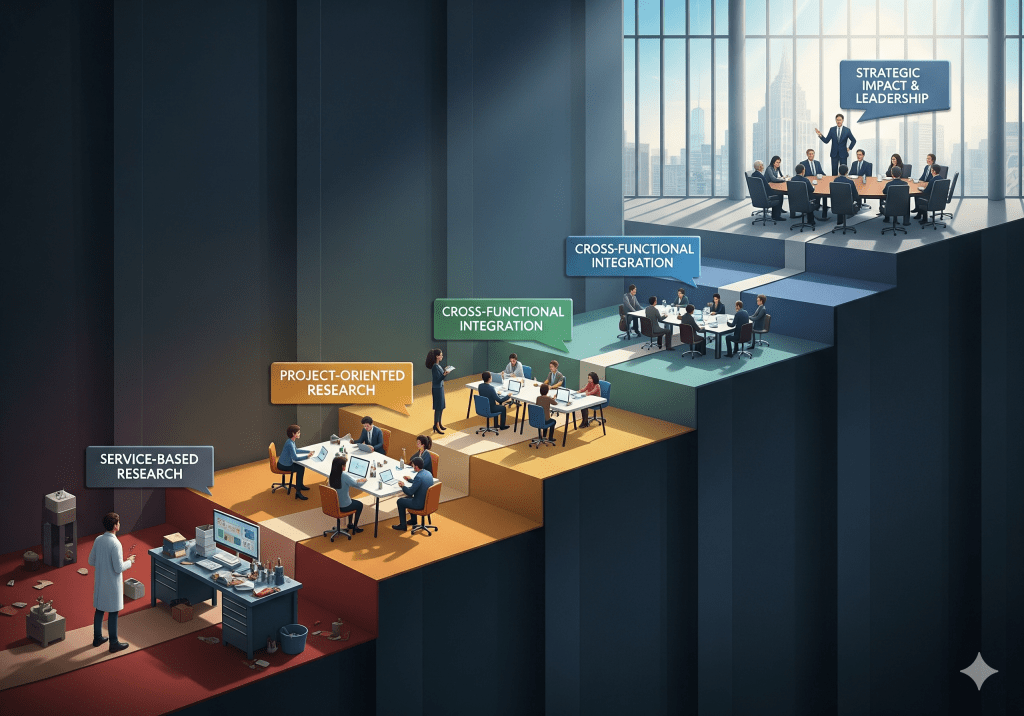

One of the most powerful lessons here is that how research is managed and distributed matters just as much as what the research uncovers. By treating quantitative behavioral data as a shared operational resource instead of a siloed deliverable, we moved from:

- Pushing insights → to receiving requests

- One-off reporting → to ongoing product workflows

- UX as observers → to UX as operational partners

This kind of shift doesn’t just solve bugs faster. It demonstrates the value of UX research in driving outcomes, builds credibility across functions, and ultimately strengthens the organization’s openness to deeper research practices.

What’s Next

Our next step is to continue refining how these reports integrate with product workflows — automating parts of the process, tagging issues by severity, and connecting friction signals to broader metrics like retention and support costs.
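To make the severity-tagging idea concrete, here is a minimal sketch in Python of how per-page friction counts might be turned into a severity tag. Everything in it is an assumption for illustration: the signal names, the weights, the thresholds, and the `PageFriction` structure are hypothetical, and it does not call FullStory's API. It assumes the counts have already been exported from whatever analytics tool is in use.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical weights per friction signal (assumption: errors hurt more
# than dead clicks). Tune these to your own product.
SIGNAL_WEIGHTS: Dict[str, float] = {
    "error_404": 3.0,
    "rage_click": 2.0,
    "dead_click": 1.0,
    "thrash": 1.0,
}


@dataclass
class PageFriction:
    """Friction counts for one page over a reporting period (hypothetical shape)."""
    page: str
    sessions: int
    signal_counts: Dict[str, int]


def friction_score(report: PageFriction) -> float:
    """Weighted friction events per 100 sessions; unknown signals count as 0."""
    if report.sessions == 0:
        return 0.0
    weighted = sum(
        SIGNAL_WEIGHTS.get(name, 0.0) * count
        for name, count in report.signal_counts.items()
    )
    return 100.0 * weighted / report.sessions


def severity(score: float) -> str:
    """Map a score to a tag using illustrative, made-up thresholds."""
    if score >= 50:
        return "high"
    if score >= 15:
        return "medium"
    return "low"


def tag_reports(reports: List[PageFriction]) -> List[Tuple[str, float, str]]:
    """Return (page, score, severity) tuples, worst pages first."""
    scored = []
    for report in reports:
        score = round(friction_score(report), 1)
        scored.append((report.page, score, severity(score)))
    return sorted(scored, key=lambda row: row[1], reverse=True)
```

Normalizing by session count keeps high-traffic pages from dominating the list just because they see more visitors. The weights and cutoffs are the part worth agreeing on with product managers, since they encode what the team considers urgent.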

For me as a research leader, this journey has reinforced an important truth: UX research maturity grows when insights become operationalized. Tools like FullStory provide the raw data, but it’s the thoughtful curation, distribution, and embedding of those insights into decision-making that makes research indispensable.

👉 Have you used behavioral analytics tools like FullStory, Glassbox, Google Analytics or Adobe to advance UX maturity in your org? I’d love to hear how you’ve operationalized your insights.
