The modern enterprise runs on collaboration. Yet, in complex, high-stakes environments like managing critical data infrastructure or large-scale software development, true collaboration often faces significant friction. Siloed teams, disparate tools, and the sheer volume of information can turn collective effort into a fragmented struggle.

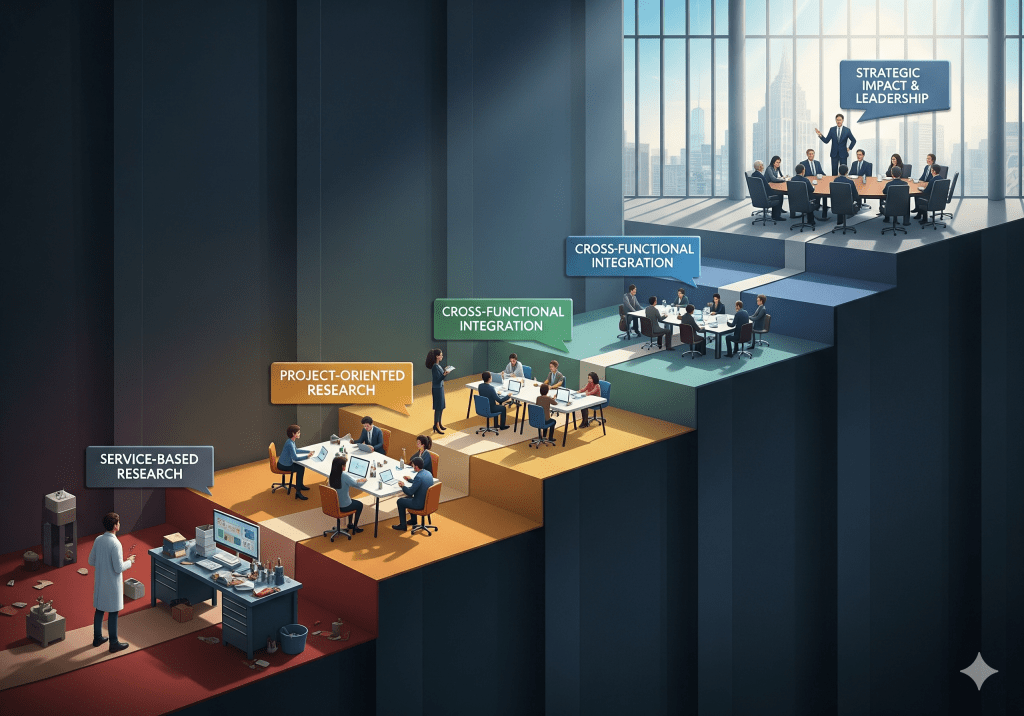

Recently, my team sought to understand how cross-functional IT teams collaborate to manage data security and compliance. Our discovery research revealed a mosaic of nuanced collaborative processes, shared but often divergent work languages, and pervasive frustrations around fragmented information and governance procedures. These insights were foundational for new product development and pointed to a clear need: smarter ways for teams to work together, not just faster ones.

This is where AI-augmented collaboration becomes not just a buzzword, but a strategic imperative. Empowering development, IT, and business teams to achieve their best work means leveraging AI to enhance, not replace, human connection and efficiency. Our role in UX Research is critical in ensuring this augmentation is not only effective but also trusted, intuitive, and truly collaborative for users of this technology.

1. Researching the “Team-Level” Impact of AI: Beyond Individual Efficiency

Many discussions around AI focus on individual productivity gains—how a single user can complete tasks faster. However, in enterprise environments, the real value of AI lies in its ability to amplify team output and cohesion. The focus must shift from individual efficiency to collective efficacy.

The research that my team conducted consistently revealed that collaboration isn’t just about sharing knowledge; it’s about shared understanding, coordinated action, and seamless hand-offs. An AI feature, for example, that summarizes complex system logs might save an individual infrastructure engineer time, but the true benefit emerges when that summary enables a security engineer to quickly grasp the context, or helps a data engineer identify downstream impacts without extensive manual investigation.

Our research methodologies must evolve to capture these multi-user, multi-context interactions. This involves:

- Observational studies of teams using AI-augmented tools in their natural workflows.

- Journey mapping that integrates AI touchpoints into existing collaborative processes.

- Qualitative interviews that probe the psychological impacts of AI on team dynamics, trust, and shared accountability.

The goal isn’t just to make individual tasks faster, but to make the entire collaborative system more intelligent, resilient, and effective.

2. Building Trust and Transparency in AI: The Foundation of Adoption

One of the most significant barriers to AI adoption in critical enterprise functions is a lack of trust. Trust isn’t just a “nice to have”; it’s a fundamental requirement, especially for technical users like storage engineers. In my experience, trust is paramount to building brand loyalty and ensuring the widespread adoption of new technologies.

My research into automation features provides a clear blueprint: users need to understand how information is gathered and analyzed. When designing and researching “smart” features, we found that providing access to logs, detailed timelines, and underlying data points was not merely a feature request, but a core component of building confidence. This transparency allows technical users to validate automation, debug potential issues, and, critically, develop conviction in the system’s reliability.

For AI-augmented collaboration, this translates to:

- Explainable AI (XAI) Principles: Users must understand why an AI tool made a suggestion, summarized information in a particular way, or flagged a specific issue. This might involve highlighting source documents, confidence scores, or the parameters used by the AI.

- Controllability and Overridability: Technical users, especially, need the ability to review, edit, and override AI suggestions. This ensures they remain in control and feel empowered, not supplanted.

- Clear Delineation of AI vs. Human Input: Clearly indicating what content or action was AI-generated versus human-generated prevents confusion and preserves accountability.
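
To make these three principles concrete, here is a minimal sketch of how a collaboration tool might represent a piece of shared content. Everything in it is an assumption for illustration: the `Suggestion` record, the `Origin` labels, the field names, and the sample log file and user names are hypothetical and not drawn from any specific product.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Origin(Enum):
    """Who produced the content: keeps AI and human input clearly separated."""
    AI = "ai"
    HUMAN = "human"


@dataclass
class Suggestion:
    """Hypothetical record for one piece of content shown to a team."""
    text: str
    origin: Origin
    sources: list[str] = field(default_factory=list)  # documents the content draws on
    confidence: Optional[float] = None  # 0.0 to 1.0, set only for AI output
    author: Optional[str] = None        # person responsible for human-authored content

    def override(self, user: str, new_text: str) -> "Suggestion":
        """Return a human-authored replacement that keeps the original sources."""
        return Suggestion(
            text=new_text,
            origin=Origin.HUMAN,
            sources=list(self.sources),
            author=user,
        )


# An AI-generated summary with its evidence and confidence exposed (made-up values).
ai_summary = Suggestion(
    text="Disk latency spiked after the 02:00 backup job.",
    origin=Origin.AI,
    sources=["storage-array.log"],
    confidence=0.82,
)

# A teammate reviews the summary and replaces it; the original record is untouched.
final = ai_summary.override(
    "security_engineer",
    "Latency spike coincides with the 02:00 backup; no breach indicators.",
)
print(final.origin.value, final.author, final.sources)
```

Keeping the original AI record intact and returning a new human-authored one is just one possible design choice; it preserves a trail of who said what, which is the accountability concern raised above.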

As UX Research practitioners, we lead the charge in defining what “trustworthy AI” means for our specific user base, translating abstract principles into actionable product requirements and measurable outcomes.

3. Quantifying the Impact of AI on Collaboration

Our role in UX Research extends beyond generating insights; it is also about demonstrating tangible impact on business outcomes. For AI-augmented collaboration features, this requires a robust, continuous measurement strategy.

My work has emphasized the power of UX benchmarking as a methodology for iterative improvement. As detailed in my article, “Driving Product Enhancements Through UX Benchmarking: A Methodology for Iterative Improvement,” establishing clear baselines and tracking key performance indicators (KPIs) over time allows us to quantitatively measure the success or failure of new features and iterate based on data.

For AI-augmented collaboration, relevant KPIs might include:

- Time to Resolution/Completion: For shared tasks, has AI reduced the time it takes for a team to resolve an incident or complete a project phase?

- Reduction in Cross-Functional Handoff Errors: Is AI reducing miscommunications or errors during transitions between cross-functional IT teams?

- Increased Knowledge Sharing: Are teams leveraging AI-generated summaries or insights more frequently, leading to higher rates of internal knowledge consumption?

- User Confidence and Adoption Rates: Tracking sentiment specifically related to AI features, alongside their usage frequency, to ensure they are being embraced rather than avoided.

- Perceived Collaboration Efficiency Score: A custom survey-based metric capturing users’ subjective experience of how efficiently their team collaborates with AI’s help.
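
As a rough illustration of benchmarking the first KPI against a baseline, the sketch below compares median time to resolution before and after an AI feature launch. The incident durations are made-up sample values, and `percent_change` is a hypothetical helper; a real benchmark would need larger samples and a check that the difference is not just noise.

```python
from statistics import median


def percent_change(baseline: float, current: float) -> float:
    """Relative change from baseline, in percent (negative means a reduction)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline * 100.0


# Hypothetical incident resolution times, in hours, for the same team.
baseline_hours = [10.0, 12.0, 8.0, 14.0, 11.0]     # before the AI feature
post_launch_hours = [8.0, 9.0, 7.0, 12.0, 9.0]     # after the AI feature

# The median is less sensitive than the mean to one unusually long incident.
baseline_median = median(baseline_hours)
current_median = median(post_launch_hours)
change = percent_change(baseline_median, current_median)

print(
    f"Median time to resolution: {baseline_median:.1f}h -> "
    f"{current_median:.1f}h ({change:+.1f}%)"
)
# prints: Median time to resolution: 11.0h -> 9.0h (-18.2%)
```

The same baseline-versus-current comparison could be applied to the other KPIs, for example handoff error counts or survey scores, as long as each is measured the same way in both periods.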

By continuously benchmarking these metrics, UX Researchers can articulate the ROI of AI investments, proving how they contribute not just to better user experience but to stronger team performance and, ultimately, more effective business operations.

Conclusion

The future of work in enterprise organizations is undeniably AI-augmented. As UX Research practitioners, we stand at the forefront of this evolution, not just observing, but actively shaping how AI integrates into the human-centric workflows of complex technical teams. By focusing on team-level impact, prioritizing transparency to build trust, and meticulously quantifying outcomes, UX Researchers ensure that AI truly serves to amplify human potential, fostering more connected and productive teams across the enterprise.
