I keep seeing the same pattern in agentic AI demos:

- It looks magical.

- It feels fast.

- And then someone asks the question that actually matters:

“Cool… but what happens when it’s wrong?”

That question is the pivot point for UX Research, Design, and Product.

Agentic systems don’t just inform users; they can act. And once software can plan and execute on a user’s behalf, the biggest UX risk is no longer confusion, friction, or even poor usability.

The biggest UX risk becomes unchecked action.

The shift: from interaction to delegation

Most UX instincts were shaped in a world where users do the work.

Click here. Configure this. Confirm that. Repeat.

Even in complex products, the user is still driving.

Agents change the driving model.

Users don’t want to execute steps—they want to hand off goals:

- “Summarize what changed.”

- “Fix this.”

- “Keep this within budget.”

- “Make it safer.”

- “Get me from A to B without breaking anything.”

That handoff is powerful—and risky.

In agentic UX, we’re no longer just designing a path through an interface. We’re designing a relationship: who decides what, when, and with what safeguards.

The real north star: calibrated trust

Teams often talk about “building trust.” But for agents, maximum trust is not the goal.

- If users trust an agent more than it deserves, they’ll accept actions blindly.

- If they trust it less than it deserves, they won’t delegate at all.

What we actually need is calibrated trust.

Users should trust the agent exactly as much as its behavior, reliability, and guardrails justify.

That’s not a messaging problem.

That’s a product design problem.

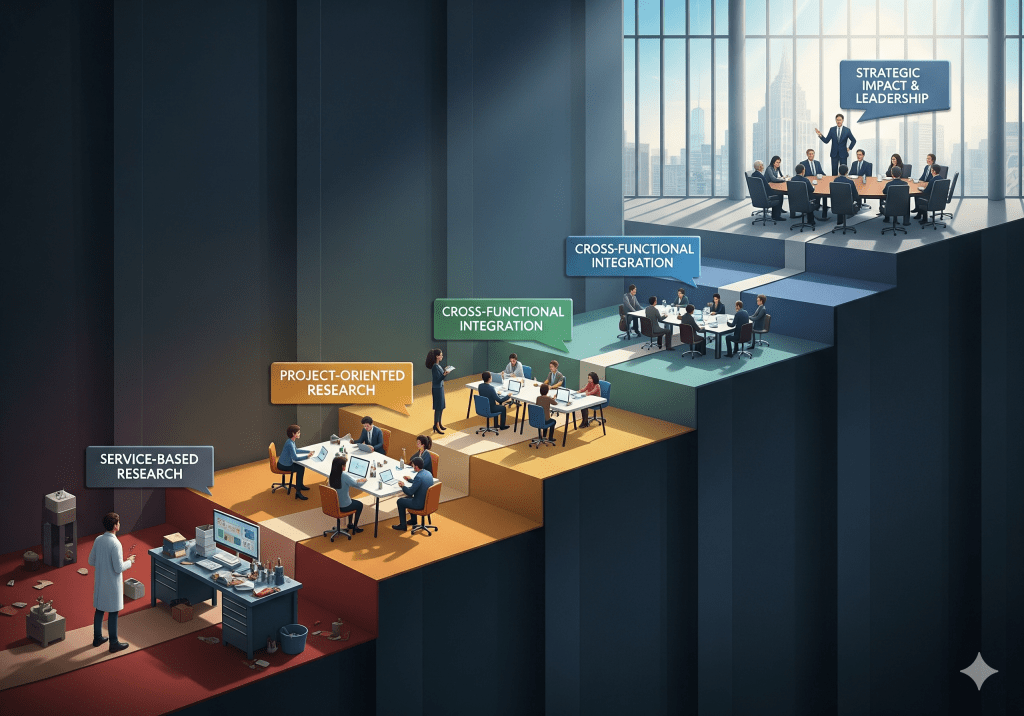

A practical way to talk about autonomy

When teams debate “how autonomous should it be?”, discussions often spiral into abstraction. I prefer a simple ladder that makes the decision concrete:

1. Explain: “Here’s what’s happening.”

2. Recommend: “Here are options and tradeoffs.”

3. Draft: “Here’s a plan, draft, or diff for review.”

4. Execute with approval: “I’ll do it after you confirm.”

5. Execute & notify: “I did it; here’s what changed (and how to undo).”

6. Autonomous within guardrails: “I act automatically inside boundaries.”

This does two important things:

- It gives UXR, PM, and Engineering a shared vocabulary.

- It turns trust into a product requirement—not a personality trait.

Trust expectations should be radically different at level 2 (Recommend) than at level 6 (Autonomous within guardrails), where the agent acts on the user’s behalf without asking first.
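One way to make the ladder concrete for Engineering is to encode it as an ordered type and attach requirements to each rung. The sketch below is illustrative only: the safeguard names (`reviewable_plan`, `stop_control`, and so on) and the rung at which each one becomes mandatory are my assumptions, not a standard. A team would substitute its own list.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """The six rungs of the ladder, ordered from least to most autonomous."""
    EXPLAIN = 1
    RECOMMEND = 2
    DRAFT = 3
    EXECUTE_WITH_APPROVAL = 4
    EXECUTE_AND_NOTIFY = 5
    AUTONOMOUS_WITHIN_GUARDRAILS = 6


def requirements(level: Autonomy) -> set[str]:
    """Return the (hypothetical) safeguards a feature must ship with at this level."""
    needed = {"explanation"}
    if level >= Autonomy.DRAFT:
        needed.add("reviewable_plan")
    if level >= Autonomy.EXECUTE_WITH_APPROVAL:
        needed |= {"action_log", "stop_control"}
    if level == Autonomy.EXECUTE_WITH_APPROVAL:
        # Only this rung blocks on an explicit confirmation.
        needed.add("approval_prompt")
    if level >= Autonomy.EXECUTE_AND_NOTIFY:
        needed |= {"undo", "change_notification"}
    if level >= Autonomy.AUTONOMOUS_WITHIN_GUARDRAILS:
        needed.add("boundary_policy")
    return needed


if __name__ == "__main__":
    for level in Autonomy:
        print(level.name, sorted(requirements(level)))
```

The point of the ordering is that a proposal to move a feature up a rung becomes a checklist diff rather than a debate about how much users "should" trust it.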

TRUST isn’t one thing—it’s a stack

When users say “I don’t trust it,” they might mean any of the following:

- “I don’t understand why it did that.”

- “I can’t predict what it will do next.”

- “I can’t stop it once it starts.”

- “I can’t undo it if it goes wrong.”

- “I can’t explain it to someone else.”

- “I don’t know what data it used.”

So it’s useful to treat TRUST as an acronym for five interaction properties, things you can design, test, and ship:

- Transparency: show what it’s doing and why

- Reliability: be consistent; fail predictably

- User control: constrain, approve, override, stop, undo

- Safety & security: gate risky actions; respect permissions and policies

- Traceability: log actions so humans can reconstruct what happened

If any one of these collapses, trust collapses—even if the model itself is impressive.
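To show that these properties are shippable rather than abstract, here is a minimal sketch of an agent wrapper in which several of them appear as plain fields and methods. Everything in it is hypothetical (`ActionRecord`, `SupervisedAgent`, and their fields are names I made up for illustration), and it leaves out reliability and permissions entirely.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ActionRecord:
    description: str           # Transparency: what the agent did
    reason: str                # Transparency: why it did it
    data_sources: list[str]    # Traceability: what data it used
    undo: Callable[[], None]   # User control: how to reverse it
    undone: bool = False       # Traceability: keep the record even after undo


@dataclass
class SupervisedAgent:
    log: list[ActionRecord] = field(default_factory=list)
    stopped: bool = False

    def stop(self) -> None:
        """User control: block all further actions."""
        self.stopped = True

    def act(self, do: Callable[[], None], record: ActionRecord) -> bool:
        """Run an action unless stopped, and log it so it can be reconstructed."""
        if self.stopped:
            return False
        do()
        self.log.append(record)
        return True

    def undo_last(self) -> bool:
        """Reverse the most recent action that has not been undone yet."""
        for record in reversed(self.log):
            if not record.undone:
                record.undo()
                record.undone = True
                return True
        return False
```

Note that undoing an action marks the record instead of deleting it, so someone can still explain afterward what happened and what was reversed.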

The new UX question: can people supervise the agent?

Classic usability asks:

Can users complete the task?

Agentic usability asks something sharper:

Can users supervise the agent well enough to feel safe delegating?

That supervision has its own experience flow:

- Set goal

- Review plan

- Approve or constrain

- Monitor

- Correct

- Recover

- Learn how to delegate better next time

Many agent experiences fail here—not because the agent can’t do the thing, but because the human can’t confidently manage the doing.
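The flow above can be treated as a small state machine, which gives a quick way to audit a design for dead ends. This is a sketch under assumptions: the list gives the stages but not the transitions between them, so the edges below are my guess at a reasonable set, and `dead_ends` is a hypothetical helper.

```python
from enum import Enum


class Stage(Enum):
    SET_GOAL = "set goal"
    REVIEW_PLAN = "review plan"
    APPROVE_OR_CONSTRAIN = "approve or constrain"
    MONITOR = "monitor"
    CORRECT = "correct"
    RECOVER = "recover"
    LEARN = "learn"


# Assumed transitions: where a user can go next from each stage.
TRANSITIONS: dict[Stage, set[Stage]] = {
    Stage.SET_GOAL: {Stage.REVIEW_PLAN},
    Stage.REVIEW_PLAN: {Stage.APPROVE_OR_CONSTRAIN, Stage.SET_GOAL},
    Stage.APPROVE_OR_CONSTRAIN: {Stage.MONITOR, Stage.REVIEW_PLAN},
    Stage.MONITOR: {Stage.CORRECT, Stage.RECOVER, Stage.LEARN},
    Stage.CORRECT: {Stage.MONITOR, Stage.RECOVER},
    Stage.RECOVER: {Stage.LEARN, Stage.REVIEW_PLAN},
    Stage.LEARN: {Stage.SET_GOAL},
}


def dead_ends(supported: set[Stage]) -> set[Stage]:
    """Return supported stages from which the product offers no supported next step."""
    return {s for s in supported if not (TRANSITIONS[s] & supported)}


if __name__ == "__main__":
    # A product that lets users watch the agent but not correct or recover.
    shipped = {Stage.SET_GOAL, Stage.REVIEW_PLAN,
               Stage.APPROVE_OR_CONSTRAIN, Stage.MONITOR}
    print(dead_ends(shipped))
```

In the example, the user can watch the agent work but has nowhere to go from there, which is the "can't confidently manage the doing" failure in miniature.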

What to research

If you’re building agentic AI, the answers to these research questions help predict adoption and prevent incidents:

1. Plan clarity

- Can users explain what the agent is about to do?

- Can they spot risks or missing constraints?

2. Oversight and control

- Do users know when they’re in charge vs. when the agent is?

- Can they easily steer, pause, or stop execution?

3. Recovery

- When the agent is wrong, how expensive is it to fix?

- Is there a clear undo path—or a panic spiral?

4. Trust calibration

- When do users accept too quickly?

- When do they overcorrect and refuse to delegate?

- What cues are they using to decide?

Answering these questions doesn’t just improve UX—it reduces product risk.
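The trust calibration questions can be given a rough number if a study records, for each agent proposal, whether the participant accepted it and whether the proposal was actually correct. The sketch below assumes exactly that data shape; the function name and the two ratios are my own framing, not an established metric.

```python
def calibration(decisions: list[tuple[bool, bool]]) -> dict[str, float]:
    """Summarize over- and under-trust.

    Each decision is a (user_accepted, agent_was_correct) pair.
    over_trust:  share of incorrect proposals the user accepted anyway.
    under_trust: share of correct proposals the user rejected.
    """
    accepted_when_wrong = [accepted for accepted, correct in decisions if not correct]
    accepted_when_right = [accepted for accepted, correct in decisions if correct]
    return {
        "over_trust": (sum(accepted_when_wrong) / len(accepted_when_wrong)
                       if accepted_when_wrong else 0.0),
        "under_trust": (sum(not a for a in accepted_when_right) / len(accepted_when_right)
                        if accepted_when_right else 0.0),
    }
```

Both numbers near zero would suggest calibrated trust. A high first number points at blind acceptance, and a high second number at refusal to delegate. Neither tells you which cues drove the decision, which still takes qualitative work.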

Metrics that matter more than “time on task”

For agents, I care less about shaving seconds and more about reducing regret. Measures that can be useful include:

- Delegation rate: what users hand off vs. do manually

- Intervention frequency: how often they step in mid-flight

- Correction cost: time, steps, or effort to fix mistakes

- Regret rate: “Would you delegate again?”

- Plan comprehension: can users predict what will happen next?

- Rollback confidence: do they believe they can undo—and can they?

If these look good, adoption follows. If they look bad, users quietly back away—even if the agent technically works.
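Several of these measures can be computed from ordinary event logs. Here is a minimal sketch, assuming a hypothetical `TaskEvent` record per task; the field names are mine, and the regret signal is assumed to come from a follow-up prompt. Plan comprehension and rollback confidence are left out because they usually need a study or survey, not a log.

```python
from dataclasses import dataclass


@dataclass
class TaskEvent:
    delegated: bool                    # did the user hand the task to the agent?
    interventions: int = 0             # times the user stepped in mid-flight
    correction_seconds: float = 0.0    # time spent fixing the result
    would_delegate_again: bool = True  # answer to the follow-up question


def summarize(events: list[TaskEvent]) -> dict[str, float]:
    """Compute delegation, intervention, correction, and regret measures."""
    delegated = [e for e in events if e.delegated]
    n = len(delegated)
    return {
        "delegation_rate": n / len(events) if events else 0.0,
        "interventions_per_task": sum(e.interventions for e in delegated) / n if n else 0.0,
        "mean_correction_seconds": sum(e.correction_seconds for e in delegated) / n if n else 0.0,
        "regret_rate": sum(not e.would_delegate_again for e in delegated) / n if n else 0.0,
    }
```

Only delegated tasks count toward the last three measures, since a task the user did manually says nothing about how the agent behaved.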

A product rule I keep coming back to

The simplest principle I’ve found for aligning UXR and PM is this:

The more autonomy you ship, the more trustworthiness must be treated like reliability engineering: measured continuously, not declared once.

At low autonomy (recommend or draft), you can learn quickly and iterate.

At higher autonomy (execute or autonomous), you need guardrails, logging, gating, and recovery paths before you scale.

Because once an agent acts, the user’s question isn’t:

“Was the UI easy?”

It’s:

“Can I live with what it just did?”

The takeaway

Agentic AI isn’t just a new interface.

It’s a new contract.

And the contract isn’t “the agent is smart.”

It’s this:

- I can understand what it’s doing

- I can control what it’s allowed to do

- I can stop it when I need to

- I can recover when it’s wrong

- I can explain what happened afterward

That’s what makes delegation feel safe.

That’s what makes trust durable.

That’s what turns a great demo into a product people will actually use.
